-

- News

- Books

Featured Books

- pcb007 Magazine

Latest Issues

Current Issue

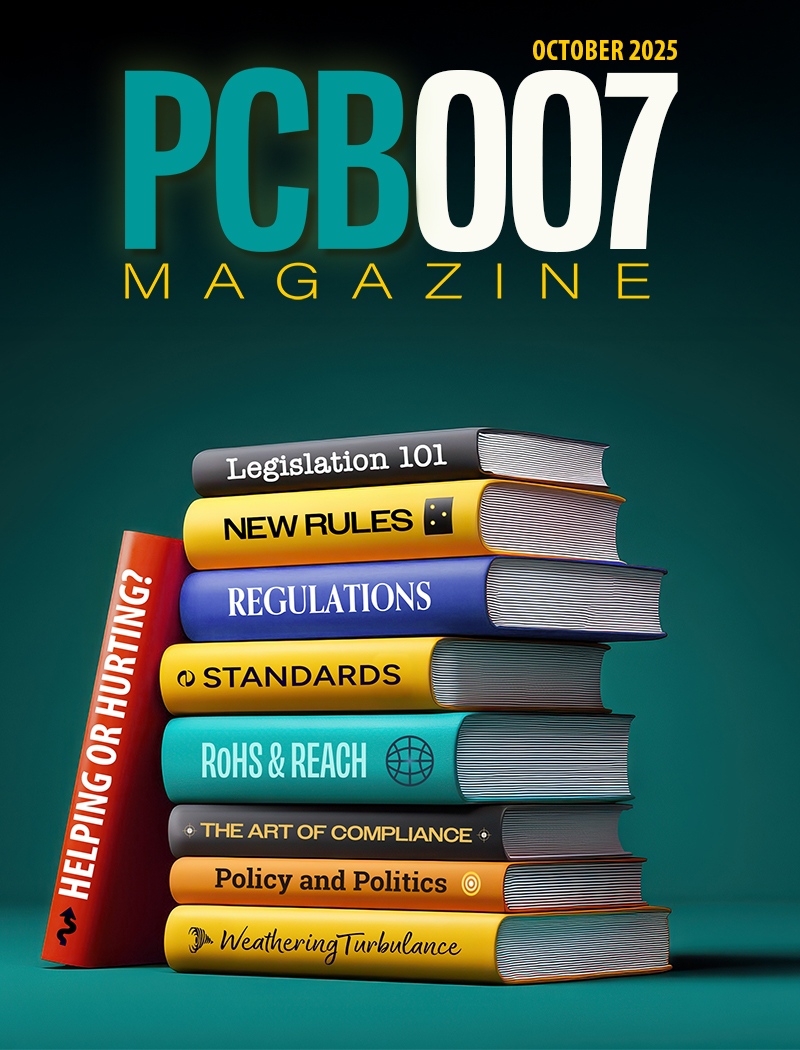

The Legislative Outlook: Helping or Hurting?

This month, we examine the rules and laws shaping the current global business landscape and how these factors may open some doors but may also complicate business operations, making profitability more challenging.

Advancing the Advanced Materials Discussion

Moore’s Law is no more, and the advanced material solutions to grapple with this reality are surprising, stunning, and perhaps a bit daunting. Buckle up for a dive into advanced materials and a glimpse into the next chapters of electronics manufacturing.

Inventing the Future With SEL

Two years after launching its state-of-the-art PCB facility, SEL shares lessons in vision, execution, and innovation, plus insights from industry icons and technology leaders shaping the future of PCB fabrication.

- Articles

- Columns

- Links

- Media kit

pcb007 Magazine

In-Memory Computing: Revolutionizing Data Processing for the Modern Era

April 21, 2025 | Persistence Market Research | Estimated reading time: 5 minutes

In a world where milliseconds matter, traditional computing architectures often struggle to keep up with the massive influx of real-time data. In-memory computing has emerged as a transformative force, enabling businesses to process information at unprecedented speeds by storing data directly in RAM instead of relying on slower disk-based storage.

By minimizing latency and dramatically boosting performance, in-memory computing enables organizations to respond faster, make more informed decisions, and deliver a better user experience. It’s not just an upgrade—it’s a reimagining of how modern computing systems should perform.

According to Persistence Market Research, the global in-memory computing (IMC) market is expected to grow from US$ 23.7 billion in 2025 to US$ 72.4 billion by 2032, a CAGR of 17.3%. This surge is driven by growing demand for real-time analytics and large-scale data processing across industries, fueled by mobile banking, digital payments, and national digitization efforts like UID initiatives. North America is projected to hold a 27.3% market share in 2025, with IMC solutions accounting for 57.3% due to their speed and big data capabilities. Risk management and fraud detection applications are set to capture 26.4% of the market amid rising cyber threats, while adoption of in-memory graph databases is accelerating for real-time fraud detection and social analytics.
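The growth figures quoted above can be cross-checked with the standard compound annual growth rate formula. The short Python sketch below uses only the numbers from the forecast; the function names `cagr` and `project` are illustrative and not part of any published model.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


YEARS = 2032 - 2025  # seven compounding periods

# Rate implied by the two market-size figures (US$ billions).
implied_rate = cagr(23.7, 72.4, YEARS)

# Market size implied by the 2025 figure and the quoted 17.3% CAGR.
projected_2032 = project(23.7, 0.173, YEARS)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"Projected 2032 market: US$ {projected_2032:.1f} billion")
```

Both directions agree with the quoted figures after rounding, so the three numbers in the forecast are internally consistent.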

From Bottlenecks to Breakthroughs: Tackling Data Challenges Head-On

Conventional systems often create bottlenecks due to limited disk I/O speeds. As data volumes skyrocket with the rise of IoT, AI, and big data analytics, these limitations have become glaringly apparent. In-memory computing tackles this challenge head-on by eliminating the need to repeatedly read from and write to disk.

The result? Drastically improved throughput and system responsiveness. For businesses relying on real-time analytics, fraud detection, or high-frequency trading, this shift can mean the difference between staying ahead or falling behind. It unlocks the full potential of modern CPUs by feeding them data at the rate they were designed to handle.
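The idea of not going back to disk on every request can be illustrated with a read-through cache. This is a minimal sketch and not how any particular in-memory product works: the class name `ReadThroughCache`, the JSON file, and the account data are all hypothetical. It counts disk reads rather than timing them, so the result does not depend on the machine.

```python
import json
import os
import tempfile


class ReadThroughCache:
    """Serves records from a dict in RAM and reads the backing file only on a miss."""

    def __init__(self, path):
        self.path = path
        self.memory = {}      # in-memory copy of the records requested so far
        self.disk_reads = 0   # how many times the file was actually opened

    def _read_from_disk(self, key):
        self.disk_reads += 1
        with open(self.path, "r", encoding="utf-8") as f:
            return json.load(f)[key]

    def get(self, key):
        if key not in self.memory:
            self.memory[key] = self._read_from_disk(key)
        return self.memory[key]


def demo():
    """Request the same record 1000 times and report how often disk was touched."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "accounts.json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump({"alice": 120, "bob": 75}, f)  # hypothetical data
        cache = ReadThroughCache(path)
        values = [cache.get("alice") for _ in range(1000)]
        return values[0], cache.disk_reads


value, disk_reads = demo()
print(f"value={value}, disk reads for 1000 lookups={disk_reads}")
```

Only the first lookup reaches the file; the remaining 999 are answered from memory. Production systems add eviction, invalidation, and concurrency control, which this sketch leaves out.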

The Engine Behind Real-Time Analytics

The speed of in-memory computing allows for instant insights, making it ideal for real-time analytics. Instead of waiting minutes—or even hours—for results from disk-bound systems, users can now run complex queries across massive datasets and receive answers almost instantaneously.

This capability is crucial for sectors like finance, e-commerce, healthcare, and telecommunications. For example, in e-commerce, real-time recommendation engines powered by in-memory data grids help personalize the customer experience on the fly. In healthcare, immediate access to patient data can be a matter of life and death. With in-memory computing, the lag is gone, and instant insight becomes the norm.
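As a small-scale illustration of querying data held entirely in RAM, Python's standard-library `sqlite3` module can open a database under the special name `:memory:`. The `orders` table, its rows, and the `top_category` helper below are hypothetical; a real recommendation engine would be far more involved, so treat this only as a sketch of the query pattern.

```python
import sqlite3

# ":memory:" tells SQLite to keep the whole database in RAM, with no file on disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, category TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("ana", "books", 20.0),
        ("ana", "games", 35.0),
        ("ben", "books", 15.0),
        ("ben", "books", 10.0),
    ],
)


def top_category(connection, customer):
    """Return (category, total_spend) for the customer's highest-spend category."""
    return connection.execute(
        """
        SELECT category, SUM(amount) AS total
        FROM orders
        WHERE customer = ?
        GROUP BY category
        ORDER BY total DESC
        LIMIT 1
        """,
        (customer,),
    ).fetchone()


print(top_category(conn, "ana"))
print(top_category(conn, "ben"))
```

The aggregate is computed at request time against live data, which is the pattern that on-the-fly personalization relies on.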

Architecting for the Future: A New Computing Paradigm

In-memory computing represents more than just a speed boost—it’s a foundational change in how systems are architected. By integrating in-memory data grids and in-memory databases, organizations can build scalable, distributed systems that are both resilient and fast.

This new architecture supports high availability, fault tolerance, and horizontal scaling. As cloud computing and hybrid environments become more prevalent, in-memory platforms can span across on-premises and cloud infrastructures, ensuring consistency and performance no matter where the data resides.

Moreover, in-memory solutions reshape the separation of compute and storage that is typical of cloud environments. They bring data closer to the compute layer, which improves efficiency and enables new levels of real-time application performance.
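The combination of horizontal scaling and fault tolerance described in this section can be sketched as hash partitioning with replication. The `PartitionedGrid` class and node names below are hypothetical, and the placement rule is a deliberately simple modulo scheme, not the consistent hashing or rebalancing that production data grids typically use.

```python
import hashlib


class PartitionedGrid:
    """Toy data grid: each key is stored on `replicas` consecutive nodes."""

    def __init__(self, node_names, replicas=2):
        self.order = list(node_names)                   # fixed placement order
        self.nodes = {name: {} for name in node_names}  # live nodes and their RAM
        self.replicas = replicas

    def _owners(self, key):
        # A stable hash of the key picks the primary; the next nodes hold copies.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        start = int.from_bytes(digest[:8], "big") % len(self.order)
        return [self.order[(start + i) % len(self.order)] for i in range(self.replicas)]

    def put(self, key, value):
        for name in self._owners(key):
            if name in self.nodes:  # skip nodes that have failed
                self.nodes[name][key] = value

    def get(self, key):
        for name in self._owners(key):
            if name in self.nodes and key in self.nodes[name]:
                return self.nodes[name][key]
        raise KeyError(key)

    def fail(self, name):
        """Simulate losing a node together with everything in its memory."""
        self.nodes.pop(name)


grid = PartitionedGrid(["node-a", "node-b", "node-c"], replicas=2)
for i in range(100):
    grid.put(f"key-{i}", i)

grid.fail("node-b")
survived = all(grid.get(f"key-{i}") == i for i in range(100))
print(f"all keys readable after losing one node: {survived}")
```

With two copies per key spread over three nodes, losing any single node still leaves one copy of every key. Adding nodes spreads the keys more thinly, which is the horizontal-scaling half of the picture, although this naive scheme would need to move data when the node list changes.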

Blending Memory and Intelligence: AI Meets In-Memory Computing

As artificial intelligence and machine learning become embedded in business operations, they demand faster access to ever-growing data sets. Training models and making real-time inferences require rapid, continuous data flow—something in-memory computing delivers with ease.

In-memory platforms can process streaming data in real time, enabling AI systems to learn and adapt on the go. Whether it’s adjusting supply chain logistics, detecting fraudulent activity, or powering autonomous systems, the marriage of in-memory computing with AI opens doors to smarter, faster decision-making.
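One common way to act on streaming data held in memory is a sliding-window check, shown here as a simple transaction-velocity rule for fraud screening. The `VelocityCheck` class, its thresholds, and the card identifier are hypothetical, and the rule is a fixed heuristic, not a learned model. The sketch assumes timestamps arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card when it makes too many transactions inside a time window."""

    def __init__(self, window_seconds=60, max_events=3):
        self.window_seconds = window_seconds
        self.max_events = max_events
        self.recent = defaultdict(deque)  # card_id -> timestamps still in the window

    def observe(self, card_id, timestamp):
        """Record one transaction; return True if the card now exceeds the limit."""
        events = self.recent[card_id]
        events.append(timestamp)
        # Evict timestamps that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_events


check = VelocityCheck(window_seconds=60, max_events=3)
flags = [check.observe("card-1", t) for t in (0, 10, 20, 30, 100)]
print(flags)
```

The fourth transaction is flagged because four events fall inside one 60-second window; by the fifth, the earlier ones have expired. All state lives in per-card deques in RAM, so each decision costs a handful of memory operations.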

Cost vs. Performance: Is In-Memory Worth the Investment?

Historically, RAM was considered too expensive to be used at scale. But that’s changing. The cost of memory has dropped significantly in recent years, making large-scale in-memory deployments more accessible than ever.

When considering the cost-benefit analysis, organizations must factor in not only the price of the hardware but also the immense value delivered through performance gains, improved customer experience, and faster time to insight. In many scenarios, the return on investment becomes clear as in-memory systems replace or augment existing infrastructures that are too slow to meet modern demands.

Industries Leading the Charge

Several industries have already embraced in-memory computing with impressive results. Financial services firms use it for algorithmic trading and fraud detection, where speed is crucial. Retailers implement it to power dynamic pricing engines and real-time customer analytics.

Telecommunications providers use in-memory platforms to manage massive amounts of customer data and ensure seamless call routing and service delivery. Even sectors like logistics and manufacturing are finding in-memory computing invaluable for real-time tracking, inventory management, and predictive maintenance.

The common thread? Wherever real-time action, analysis, and agility are needed, in-memory computing steps in as the solution.

Challenges and Considerations on the Path to Adoption

Despite its advantages, adopting in-memory computing isn’t without challenges. Transitioning from legacy systems requires careful planning, especially in terms of data migration and integration with existing applications.

Additionally, data durability must be addressed. Since RAM is volatile, solutions must include mechanisms like persistent memory, backups, or replication strategies to ensure data isn’t lost during outages. Security also becomes a key consideration, as faster data access must be matched with robust protection protocols.

Organizations must weigh these factors and work with experienced partners or platforms to ensure a smooth implementation.
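The durability concern above is often addressed by logging every write to persistent storage before applying it in memory, then replaying the log on restart. The sketch below shows that idea with an append-only file; the `DurableStore` class, the file name, and the data are hypothetical, and real systems add snapshots, log compaction, and replication on top.

```python
import json
import os
import tempfile


class DurableStore:
    """In-memory key-value store that survives restarts via an append-only log."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        # Recovery: replay earlier writes, oldest first, to rebuild the dict.
        if os.path.exists(log_path):
            with open(log_path, "r", encoding="utf-8") as log:
                for line in log:
                    record = json.loads(line)
                    self.data[record["key"]] = record["value"]

    def put(self, key, value):
        # Write to the log and force it to disk before updating memory.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        self.data[key] = value

    def get(self, key):
        return self.data[key]  # reads never touch the disk


def simulate_restart():
    """Write two values, drop the in-memory state, and recover it from the log."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "store.log")
        store = DurableStore(path)
        store.put("balance", 100)
        store.put("balance", 250)  # later entries override earlier ones on replay
        del store                  # stands in for a crash or power loss
        recovered = DurableStore(path)
        return recovered.get("balance")


recovered_balance = simulate_restart()
print(f"balance after restart: {recovered_balance}")
```

Reads stay at memory speed while each write pays for one disk sync. That trade-off is the reason many platforms batch log writes or lean on replication or persistent memory instead.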

The Road Ahead: A Future Powered by Memory

As data continues to explode in volume and importance, the need for faster, smarter computing will only grow. In-memory computing is uniquely positioned to be at the center of this evolution, empowering applications that demand real-time intelligence and immediate responsiveness.

With advancements in memory technology, such as persistent memory and memory-centric architectures, the line between storage and processing continues to blur. The future isn’t just about computing faster—it’s about computing smarter. And with in-memory computing, that future is already underway.

Final Thoughts

In-memory computing isn’t just a trend—it’s a technological revolution that’s reshaping the landscape of data-driven business. By eliminating traditional bottlenecks and bringing computation closer to the data, it unlocks a new level of performance that’s essential for modern applications.

Whether you're looking to boost analytics, power AI, or simply make your systems more responsive, embracing in-memory computing might just be the smartest move your organization makes this decade, according to Persistence Market Research.
